Design and Testing

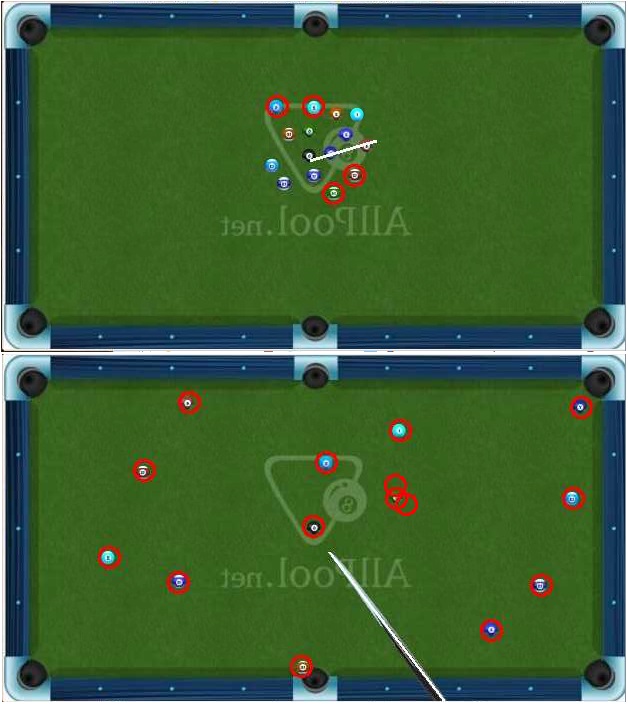

The first step in our project was accurately detecting the elements on the pool table. To project trajectories properly for a given scenario, the Pi had to know where the balls were located. Our first attempt used the OpenCV function HoughCircles, which takes an input image and a number of detection parameters and returns the circles it detects in the image. After extensive tuning, we were able to detect the balls accurately within a single image (Figure 1). However, we discovered that whenever the image changed (if the balls changed in number or location), we had to retune the parameters. This was impractical, since our design is supposed to work with any given scenario.

The next attempt used contour detection. First, we take a baseline image of the pool table and add features such as balls and the cue stick to it. Then, we subtract the baseline from the modified image, leaving a result in which only the added objects are visible. After the result is converted to grayscale, cv2.findContours locates the objects, and contours are drawn around them (Figure 2).

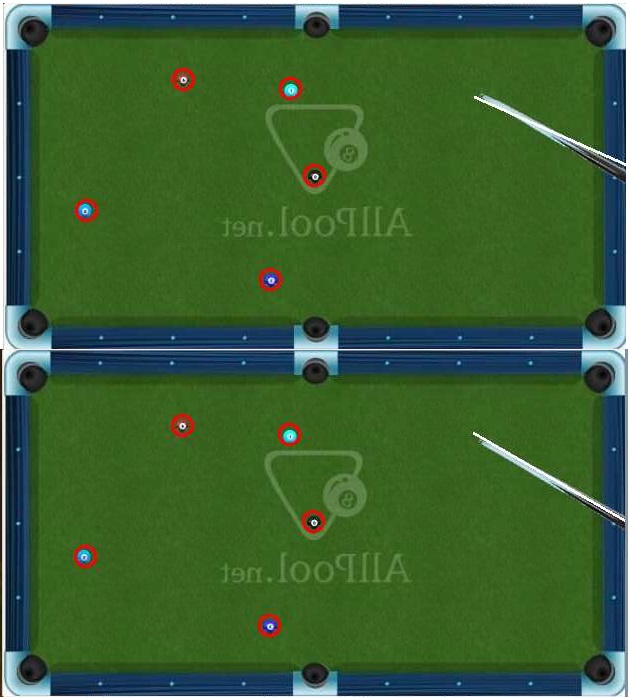

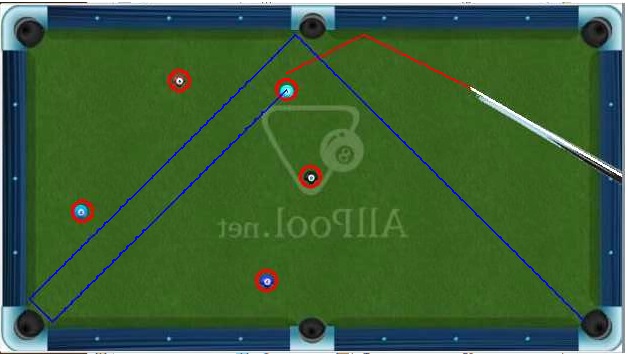

We then process the contours to determine whether each one is a ball or a cue based on its size. Calling cv2.minEnclosingCircle() on a contour returns the (x,y) center and the radius of the smallest circle that encloses it. Because the circle enclosing the cue is much larger than the one enclosing a ball, the radius makes it easy to differentiate between the two. We then draw either a circle (for a ball) or a line (for the cue) on the input image at the obtained (x,y) positions.

Keeping track of the ball and cue positions, we move the image into PyGame, which is where the rest of our functionality comes in. In an image with the cue, we take the endpoints of the contour-based line and use them as our predicted cue trajectory. Pressing ENTER then produces a projection line based on the predicted trajectory, simulating the path of a cue ball hit straight on by the cue (Figure 3). If this line collides with any ball on the table, we use a hard-ball dynamics scheme to determine the collision angle and the direction in which the struck ball will travel.
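The radius-based classification can be sketched as follows. This is a minimal illustration rather than our exact code: the function name `classify_contours` and both threshold values are hypothetical, and the input is assumed to be a list of `((x, y), radius)` pairs of the form that cv2.minEnclosingCircle() returns for each contour.

```python
# Hypothetical thresholds in pixels; real values depend on camera height and resolution.
NOISE_MIN_RADIUS = 3.0   # anything smaller is treated as subtraction noise
BALL_MAX_RADIUS = 20.0   # anything larger is treated as the cue


def classify_contours(circles,
                      noise_min_radius=NOISE_MIN_RADIUS,
                      ball_max_radius=BALL_MAX_RADIUS):
    """Split enclosing circles into balls and cue candidates by radius.

    circles: iterable of ((x, y), radius) pairs, one per contour.
    Returns (balls, cues), each a list of (x, y, radius) tuples.
    """
    balls, cues = [], []
    for (x, y), radius in circles:
        if radius < noise_min_radius:
            continue  # too small to be a ball or a cue
        if radius <= ball_max_radius:
            balls.append((x, y, radius))
        else:
            cues.append((x, y, radius))
    return balls, cues


if __name__ == "__main__":
    sample = [((100.0, 50.0), 12.0), ((300.0, 200.0), 150.0), ((5.0, 5.0), 1.0)]
    balls, cues = classify_contours(sample)
    print("balls:", balls)
    print("cues:", cues)
```

A single cutoff works here only because the cue is much longer than a ball's diameter; if other large objects could appear in the frame, an additional shape check would be needed.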

To determine the shot angle from the position of the cue, we compute the change in position along each image axis by taking the difference between the cue's final and initial positions. Treating these two components as the vector of the pool cue, we determine the angle the cue makes with the vertical axis. From this angle, we find the velocity of the cue ball along each axis by multiplying the magnitude of the ball's velocity by the cosine and sine of the angle. Using this velocity information, we are able to simulate the cue ball moving across the screen and colliding with objects.
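As a rough sketch of this calculation, the snippet below converts two cue endpoints and a speed into per-axis velocity components. The function name `cue_velocity` and the endpoint ordering (initial point first, final point second) are assumptions made for illustration. The angle is measured from the vertical (y) axis, so the sine gives the x component and the cosine gives the y component.

```python
import math


def cue_velocity(start, end, speed):
    """Return (vx, vy) for a ball moving along the cue direction.

    start, end: (x, y) cue endpoints in image coordinates (assumed order).
    speed: magnitude of the ball's velocity, in pixels per timestep.
    """
    dx = end[0] - start[0]
    dy = end[1] - start[1]
    if dx == 0 and dy == 0:
        raise ValueError("cue endpoints must be distinct")
    # atan2(dx, dy) is the angle between the cue vector and the +y axis.
    angle = math.atan2(dx, dy)
    vx = speed * math.sin(angle)
    vy = speed * math.cos(angle)
    return vx, vy


if __name__ == "__main__":
    print(cue_velocity((0, 0), (3, 4), 10.0))
```

Using `atan2` instead of dividing the components avoids a division by zero when the cue is perfectly horizontal or vertical, and it keeps the correct sign in every quadrant.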

Our dynamics scheme is based on the model given on Professor Bruce Land’s ECE4760 page (see References and Code Snippet 2 in Appendix below). The projections are timestep-based: in each iteration of the loop, the projection moves forward slightly at a certain “velocity”, and the balls begin at rest. In each timestep, the ballCollision function loops through each known ball and, if the current trajectory would cause a collision with a ball in that timestep, returns the index of that ball. Given the index, the main loop recognizes that there is a collision and, from the (x,y) position of the collided ball, calculates the radial distance and the “velocity” difference between the current position and the collided ball. That information is then combined to form the dot product between distance and velocity, as well as the squared distance magnitude. The dot product multiplied by the x (y) distance and divided by the squared magnitude gives the change in x (y) velocity.

There is an important point to highlight here: after a collision, the collided ball becomes the new “tracked” ball, and the trajectory of the previous ball is no longer tracked. Thus, in the code, we remove the collided ball from the array of tracked balls. This is mainly to prevent multiple overlapping collisions. Normally, when using this dynamics model, collisions must be ignored for several timesteps after each collision in order to prevent overlap issues. Our method circumvents that problem with minimal impact on the resulting simulation.
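The collision step can be sketched as below. This is a simplified illustration under stated assumptions, not the code from the Appendix: all balls are taken to have equal mass and radius, the struck ball starts at rest, and the names `find_collision`, `struck_ball_velocity`, and `project` are hypothetical (`find_collision` plays the role that ballCollision plays in our code).

```python
def find_collision(pos, balls, radius):
    """Return the index of the first ball touching a ball centered at pos, else None."""
    for i, (bx, by) in enumerate(balls):
        dx, dy = pos[0] - bx, pos[1] - by
        # Two equal balls touch when their centers are within two radii.
        if dx * dx + dy * dy <= (2 * radius) ** 2:
            return i
    return None


def struck_ball_velocity(pos, vel, ball_pos):
    """Velocity given to a resting ball at ball_pos by a ball at pos moving at vel."""
    rx, ry = pos[0] - ball_pos[0], pos[1] - ball_pos[1]  # radial distance
    vx, vy = vel               # relative velocity (struck ball is at rest)
    dot = rx * vx + ry * vy    # dot product of distance and velocity
    r2 = rx * rx + ry * ry     # squared distance magnitude
    return rx * dot / r2, ry * dot / r2


def project(pos, vel, balls, radius, max_steps=1000):
    """Step the projection forward and return (path, hits)."""
    balls = list(balls)        # copy so the caller's list is untouched
    path, hits = [pos], []
    for _ in range(max_steps):
        pos = (pos[0] + vel[0], pos[1] + vel[1])
        path.append(pos)
        hit = find_collision(pos, balls, radius)
        if hit is not None:
            # The struck ball becomes the tracked ball and leaves the list,
            # so it cannot register a second, overlapping collision.
            ball_pos = balls.pop(hit)
            vel = struck_ball_velocity(pos, vel, ball_pos)
            pos = ball_pos
            path.append(pos)
            hits.append(ball_pos)
    return path, hits


if __name__ == "__main__":
    path, hits = project((0.0, 0.0), (1.0, 0.0), [(10.0, 0.0)], radius=1.0, max_steps=20)
    print(hits, path[-1])
```

In a head-on hit the struck ball takes the full incoming velocity, while in an oblique hit it receives only the component along the line joining the two centers.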

In addition to the projection line from the cue, we added the ability to place a cue anywhere on the table. Clicking on two points places a virtual cue between them, and pressing ENTER gives the projection of the shot from the virtual cue. This allows players to visualize shots from any position and any angle on the table, and allows us to give projections for images in which no cue is present (Figure 4).

Our last added functionality is an additional mode in which velocity, shot power, and friction are taken into account. After the cue position is determined, the shot power can be input (between 1 and 10), which sets the starting velocity of the cue ball projection. With each animation timestep, the velocity decreases by a “friction” factor, and the simulation ends when the velocity has decreased to the point where the ball no longer appears to be moving. The purpose of this mode is to simulate the real-life movement of the balls across the pool table more closely, since shot power and friction are important physical aspects that players must take into account.
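A minimal sketch of this mode is shown below. Several details are assumptions made for illustration: friction is modeled as a multiplicative factor applied once per timestep, shot power maps linearly to starting speed, and the names `simulate_shot`, `speed_per_power`, and `stop_speed` (along with their default values) are hypothetical.

```python
def simulate_shot(power, direction, friction=0.98,
                  speed_per_power=2.0, stop_speed=0.05):
    """Simulate a shot with friction and return (final_position, steps).

    power: integer shot power from 1 to 10.
    direction: (ux, uy) unit vector for the shot direction.
    friction: per-timestep multiplier on speed (assumed model, 0 < friction < 1).
    """
    if not 1 <= power <= 10:
        raise ValueError("power must be between 1 and 10")
    if not 0 < friction < 1:
        raise ValueError("friction must be between 0 and 1")
    speed = power * speed_per_power   # starting speed in pixels per timestep
    x, y = 0.0, 0.0
    steps = 0
    # Stop once the ball is too slow to be seen moving on screen.
    while speed >= stop_speed:
        x += direction[0] * speed
        y += direction[1] * speed
        speed *= friction
        steps += 1
    return (x, y), steps


if __name__ == "__main__":
    for power in (1, 5, 10):
        (x, y), steps = simulate_shot(power, (1.0, 0.0))
        print(power, round(x, 1), steps)
```

With a multiplicative factor, the total travel distance is bounded by the starting speed divided by (1 - friction), so doubling the shot power roughly doubles how far the ball rolls.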